As Moore’s Law begins to slow, the search is on for new ways to keep the exponential rise in processing speeds going. New research suggests that an exotic approach known as “lightwave electronics” could be a promising new avenue.

While innovation in computer chips is far from dead, there are signs that the exponential increase in computing power we’ve gotten used to over the past 50 years is starting to slow. As transistors shrink to almost atomic scales, it’s becoming harder to squeeze ever more onto a computer chip, undercutting the trend that Gordon Moore first observed in 1965: that the number of transistors on a chip was doubling at a steady pace, a rate he later pegged at roughly every two years.

But an equally important trend in processing power petered out much earlier: “Dennard scaling,” which stated that the power consumption of transistors fell in line with their size. This was a very useful tendency, because chips quickly heat up and get damaged if they draw too much power. Dennard scaling meant that every time transistors shrank, so did their power consumption, which made it possible to run chips faster without overheating them.
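As a rough illustration of why this mattered, here is a minimal sketch of the textbook idealization of Dennard scaling, assuming dynamic power per transistor goes as capacitance times voltage squared times clock frequency. The function name `dennard_scale` and the scale factor `k` are hypothetical, chosen only for this example.

```python
def dennard_scale(k: float) -> dict:
    """Idealized Dennard scaling for a linear shrink factor k > 1.

    Assumption (textbook idealization, not taken from the article):
    dimensions, voltage and capacitance all shrink by 1/k, while the
    achievable clock frequency rises by k.
    """
    capacitance = 1.0 / k          # relative to the previous generation
    voltage = 1.0 / k
    frequency = k
    # Dynamic power per transistor is proportional to C * V^2 * f.
    power_per_transistor = capacitance * voltage**2 * frequency
    area_per_transistor = 1.0 / k**2
    return {
        "frequency": frequency,
        "power_per_transistor": power_per_transistor,
        "area_per_transistor": area_per_transistor,
        # Power per unit of chip area: the quantity that governs heating.
        "power_density": power_per_transistor / area_per_transistor,
    }


result = dennard_scale(2.0)
for name, value in result.items():
    print(f"{name}: {value}")
```

In this idealized picture, halving the transistor dimensions doubles the clock rate while the power drawn per unit of chip area stays the same, which is why chips could get faster without running hotter.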

Dennard scaling came unstuck around 2005 as current leakage became a growing problem at very small scales, and the exponential rise in chip clock rates stalled. Chipmakers responded by shifting to multi-core processing, where several processor cores run in parallel to complete jobs faster, but clock rates have remained more or less stagnant since then.

Now though, researchers have demonstrated the foundations of a technology that could allow clock rates one million times higher than today’s chips. The approach relies on using lasers to elicit ultra-fast bursts of electricity and has been used to create the fastest-ever logic gate—the fundamental building block of all computers.

So-called “lightwave electronics” relies on the fact that it’s possible to use laser light to excite electrons in conducting materials. Researchers have already demonstrated that ultra-fast laser pulses are able to generate bursts of current on femtosecond timescales—a millionth of a billionth of a second.

Doing anything useful with them has proven more elusive, but in a paper in Nature, researchers used a combination of theoretical studies and experimental work to devise a way to use this phenomenon for information processing.

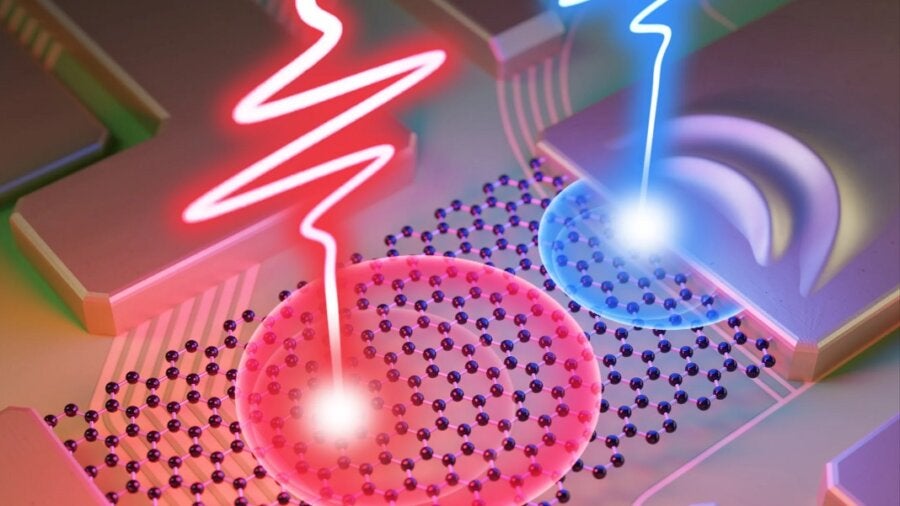

When the team fired their ultra-fast laser at a graphene wire strung between two gold electrodes, it produced two different kinds of currents. Some of the electrons excited by the light continued moving in a particular direction once the light was switched off, while others were transient and were only in motion while the light was on. The researchers found that they could control the type of current created by altering the shape of their laser pulses, and they used that control as the basis of their logic gate.

Logic gates work by taking two inputs—either 1 or 0—processing them, and providing a single output. The exact processing rules depend on the kind of logic gate implementing them, but for example, an AND gate only outputs a 1 if both its inputs are 1, otherwise it outputs a 0.
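For readers who think in code, the AND rule described above can be written as a minimal sketch. The function name `and_gate` is just an illustrative choice.

```python
def and_gate(a: int, b: int) -> int:
    """Return 1 only if both inputs are 1, otherwise 0."""
    return 1 if (a == 1 and b == 1) else 0


# Print the full truth table: every combination of two binary inputs.
for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", and_gate(a, b))
```

Only the last row of the table, where both inputs are 1, produces a 1.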

In the researchers’ new scheme, two synchronized lasers are used to create bursts of either the transient or permanent currents, which act as the inputs to the logic gate. These currents can either add up or cancel each other to provide the equivalent of a 1 or 0 as an output.
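The add-or-cancel idea can be pictured with a toy model. This is only a sketch under loud assumptions, not the researchers' actual scheme: each laser's current burst is reduced to a signed number in arbitrary units, and the hypothetical `threshold` decides whether the net current reads as a 1 or a 0.

```python
def net_current(burst_a: float, burst_b: float) -> float:
    """Sum two signed current bursts (arbitrary units, toy model)."""
    return burst_a + burst_b


def gate_output(burst_a: float, burst_b: float, threshold: float = 0.5) -> int:
    """Read out 1 if the net current is large, 0 if the bursts cancel.

    The threshold value is an assumption made purely for illustration.
    """
    return 1 if abs(net_current(burst_a, burst_b)) > threshold else 0


print(gate_output(+1.0, +1.0))  # bursts in the same direction add up
print(gate_output(+1.0, -1.0))  # bursts in opposite directions cancel
```

Which logic operation such a readout implements depends on how input bits are mapped onto the bursts, a detail this toy model leaves open.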

Because the laser pulses are so fast, the resulting gate can operate at petahertz frequencies, one million times faster than the gigahertz clock rates of today’s fastest computer chips.
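The factor of one million follows directly from the metric prefixes, as this quick check shows. The variable names are illustrative.

```python
GIGAHERTZ = 10**9    # cycles per second
PETAHERTZ = 10**15   # cycles per second

# Ratio of a petahertz clock to a gigahertz clock.
speedup = PETAHERTZ // GIGAHERTZ
print(f"speedup: {speedup:,}x")

# One cycle at 1 PHz lasts 1 / 10^15 seconds, i.e. one femtosecond.
period_seconds = 1 / PETAHERTZ
period_femtoseconds = period_seconds / 1e-15
print(f"period at 1 PHz: {period_femtoseconds} fs")
```

A single cycle at one petahertz lasts one femtosecond, the same timescale as the laser-driven current bursts described earlier.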

Obviously, the setup is vastly larger and more complex than the simple arrangement of transistors used for conventional logic gates, and shrinking it down to the scales required to make practical chips will be a mammoth task.

But while petahertz computing is not around the corner, the new research suggests that lightwave electronics could be a promising and powerful avenue to explore for the future of computing.

Image Credit: University of Rochester / Michael Osadciw

* This article was originally published at Singularity Hub
