Looks aren’t everything. But they must be something, otherwise dating apps like Tinder and Bumble wouldn’t be having nearly as much success as they are. Unfortunately, you can’t tell just from someone’s appearance whether you’re likely to get along with them—and equally importantly when it comes to the world of swiping, whether they find you attractive in return.

A new AI could throw a wrench in the already-overwhelming world of dating apps. Developed by a team from the University of Helsinki and the University of Copenhagen, the artificially intelligent system was able to generate images of fake faces that particular users were likely to find attractive, because those same users’ brain activity played a part in training the AI. It sounds creepy, futuristic, and like the ultimate catfishing opportunity, right? Here’s how it works.

The system, which was detailed in a paper published in February and available on IEEE Xplore, uses a generative adversarial network, or GAN, to create fake faces. The word “adversarial” is in there because a GAN is made up of two different neural networks competing against one another. There’s the generator network, which generates data (in this case, images) similar to the examples in its training data. The discriminator network, meanwhile, tries to pick out which images are fake and which are real (the fake images created by the generator are mixed with real images from the training data). As the cycle is repeated over and over, the generator gets better at creating realistic images, while the discriminator gets better at picking out the fake ones. Talk about symbiosis!
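
For the curious, here is a minimal sketch of that generator-versus-discriminator loop. It is not the researchers’ code: instead of faces, the "real" data are just numbers clustered around 4, the generator is a learned scale and shift applied to random noise, and the discriminator is a single logistic unit. Every name and setting here (`train`, `generator_step`, the learning rate, the batch size) is an assumption chosen for illustration.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def generate(gen, z):
    """Generator: turn random noise z into fake samples (learned scale and shift)."""
    return gen["a"] * z + gen["b"]

def discriminate(disc, x):
    """Discriminator: estimated probability that each sample in x is real."""
    return sigmoid(disc["w"] * x + disc["c"])

def discriminator_step(disc, gen, real, z, lr):
    """One gradient step on the loss -log D(real) - log(1 - D(fake))."""
    fake = generate(gen, z)
    p_real = discriminate(disc, real)
    p_fake = discriminate(disc, fake)
    # Gradient of the loss with respect to the discriminator's logit.
    g_real = -(1.0 - p_real)
    g_fake = p_fake
    disc["w"] -= lr * (np.mean(g_real * real) + np.mean(g_fake * fake))
    disc["c"] -= lr * (np.mean(g_real) + np.mean(g_fake))

def generator_step(disc, gen, z, lr):
    """One gradient step on the loss -log D(fake): try to fool the discriminator."""
    p_fake = discriminate(disc, generate(gen, z))
    g_sample = -(1.0 - p_fake) * disc["w"]  # gradient w.r.t. each fake sample
    gen["a"] -= lr * np.mean(g_sample * z)
    gen["b"] -= lr * np.mean(g_sample)

def train(steps=2000, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on toy 1-D data."""
    rng = np.random.default_rng(seed)
    gen = {"a": 1.0, "b": 0.0}    # the generator starts out centered on 0
    disc = {"w": 0.0, "c": 0.0}   # the discriminator starts out undecided
    for _ in range(steps):
        real = rng.normal(4.0, 1.0, batch)  # stand-in for real training images
        z = rng.normal(0.0, 1.0, batch)     # random noise fed to the generator
        discriminator_step(disc, gen, real, z, lr)
        generator_step(disc, gen, z, lr)
    return gen, disc

if __name__ == "__main__":
    gen, disc = train()
    print("generator parameters:", gen)
    print("discriminator parameters:", disc)
```

The two `*_step` functions are the whole contest: the discriminator is nudged toward telling real from fake, then the generator is nudged toward whatever the discriminator currently believes looks real. A face-generating GAN swaps these two tiny models for deep neural networks, but the alternating updates follow the same pattern.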

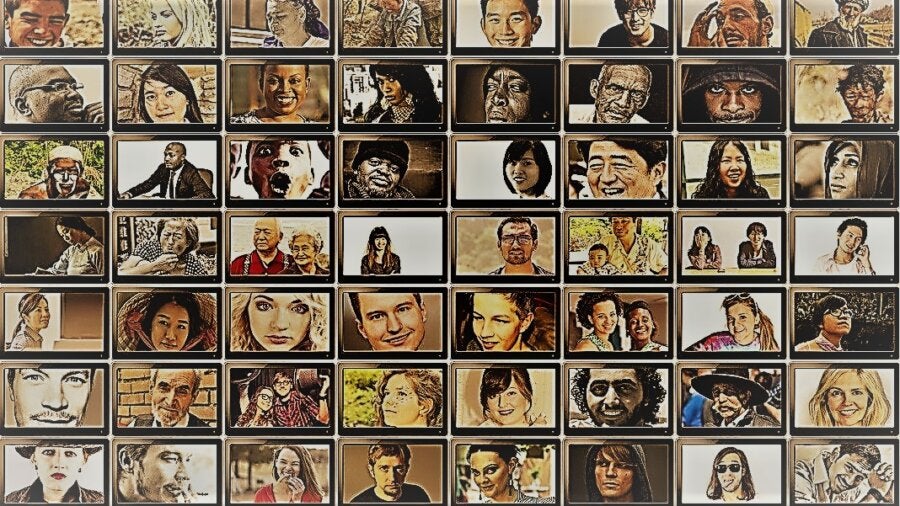

The researchers trained their GAN with 200,000 images of celebrities. We all know celebrities don’t get famous by being unattractive, so needless to say, this neural network saw a lot of pretty people—or, at least, people who’d be considered pretty according to conventional Hollywood standards. True beauty is in the eye of the beholder, of course.

The celebrity-soaked system then conjured hundreds of images of imaginary people, and these were shown to 30 real people (study participants) whose brain activity was being monitored. This was accomplished with electroencephalography (EEG), which uses a network of electrodes and wires to pick up the electrical signals of neurons firing in the brain. Perhaps unsurprisingly, there was an increase in brain activity when participants were shown an image of a face they found attractive (though this was at least partially due to the fact that the participants had been specifically instructed to focus on faces they thought were attractive).

Participants didn’t have to be conscious of why they found a given face attractive or which of its features appealed to them (Wide-set eyes? High cheekbones? A big nose?). The system stored the data for each face a participant liked, then found the commonalities among them, distilling the data points down to specific features. Apparently we humans tend to be pretty unoriginal and feel attraction to the same attributes over and over again.

The team then took the data showing which features each participant found appealing and fed it back into the GAN. The result? Custom-made fake faces that combine all of one’s favorite traits. Curly hair? Check. Chiseled jawline? Check. Eyes like black coffee? Yup. If only the faces were real—and belonged to people who wanted to date you.
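
One simple way to picture that last step, as a sketch only: a GAN builds each face from a list of numbers (a "latent vector"), so the faces a participant responded to can be boiled down to their shared traits by averaging those lists. The averaging approach, the function name `preference_vector`, the 512-number vectors, and the made-up "liked" labels below are all assumptions for illustration, not details taken from the article.

```python
import numpy as np

def preference_vector(latents, liked):
    """Average the latent vectors of the faces flagged as attractive."""
    latents = np.asarray(latents, dtype=float)
    liked = np.asarray(liked, dtype=bool)
    if not liked.any():
        raise ValueError("no faces were flagged as attractive")
    return latents[liked].mean(axis=0)

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical data: 240 generated faces, each built from 512 numbers.
    latents = rng.normal(size=(240, 512))
    # Hypothetical labels standing in for what the EEG readings would supply.
    liked = np.zeros(240, dtype=bool)
    liked[:40] = True
    target = preference_vector(latents, liked)
    print(target.shape)
    # A trained generator (not included here) would turn `target` into an image.
```

Averaging keeps whatever the liked faces have in common and washes out what differs between them, which is roughly the "same attributes over and over" idea expressed in numbers.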

When the fake faces generated from individuals’ preferences were shown back to them (mixed in with control images), participants rated them as attractive 87 percent of the time. What about the other 13 percent, you ask? Perhaps they were too perfect (can something be so beautiful it’s ugly?), or their particular combination of facial features was a bit… off. They were, after all, not real.

There are certainly some sinister ways technology like this could be used, and for those the faces don’t even need to be attractive; they just need to look real. Any circumstance where it would be useful to have fake people, like profile photos for dummy social media accounts used to manipulate online discourse, is a ready target for technological treachery.

Luckily, the research team has some productive, non-catfish applications for their system in mind. “This could help us to understand the kind of features and their combinations that respond to cognitive functions, such as biases, stereotypes, but also preferences and individual differences,” Tuukka Ruotsalo, an associate professor at the University of Helsinki, told Digital Trends.

Image Credit: Gerd Altmann from Pixabay

* This article was originally published at Singularity Hub
