The lenses could give soft robots the ability to ‘see’ without electronics.

Our eyes naturally adjust to the visual world. From reading small fonts on a screen to scanning lush greenery in the great outdoors, they automatically change their focus to see everything near and far.

This is a far cry from camera systems. Even top-of-the-line offerings, such as full-frame mirrorless cameras, require multiple bulky lenses to cover a wide range of focal lengths. For example, photographers use telephoto lenses to film wildlife at a distance and macro lenses to capture the fine details of small things up close—say, a drop of morning dew on a flower.

In contrast, our eyes are made of “soft, flexible tissues in a highly compact form,” Corey Zhang and Shu Jia at the Georgia Institute of Technology recently wrote.

Inspired by nature, the duo engineered a highly flexible robotic lens that adjusts its curvature in response to light, no external power needed. Added to a standard microscope, the lens could zero in on individual hairs on an ant’s leg and the lobes of single pollen grains.

Called a photoresponsive hydrogel soft lens (PHySL), the system could be especially useful for mimicking human vision in soft robots. It could also open the door to a range of uses in medical imaging, environmental monitoring, or even as an alternative camera in ultra-light mobile devices.

Artificial Eyes

We’re highly visual creatures. Roughly 20 percent of the brain’s cortex—four to six billion neurons—is devoted to processing vision.

The process begins when light hits the cornea, a clear dome-shaped structure at the front of the eye. This layer of tissue begins focusing the light. Next, light passes through the pupil, the opening at the center of the colored iris. The pupil dilates in dim light and constricts in bright light to control how much light reaches the lens, which sits directly behind it.

A flexible structure reminiscent of an M&M, the lens focuses light onto the retina, which then translates it into electrical signals for the brain to interpret. Eye muscles change focal length by physically pulling the lens into different shapes. Working in tandem with the cornea, this flexibility allows us to change what we’re focusing on without conscious thought.

Despite their delicate nature and daily use, our eyes can remain in working order for decades. It’s no wonder scientists have tried to engineer artificial lenses with similar properties. Biologically inspired eyes could be especially helpful in soft robots navigating dangerous terrain with limited power. They could also make surgical endoscopes and other medical tools more compatible with our squishy bodies or help soft grippers pick fruit and other delicate items without bruising or breaking them.

“These features have prompted substantial efforts in bioinspired optics,” wrote the team. Several previous attempts used a fluid-based method, which changes the curvature—and hence, focal length—of a soft lens with external pressure, an electrical zap, or temperature. But these are prone to mechanical damage. Other contraptions using solid hardware are sturdier, but they require heavier motors to operate.

“The optics needed to form a visual system are still typically restricted to rigid materials using electric power,” wrote the team.

New Perspective

The new system brought two fields together: adjustable lenses and soft materials.

The system’s lens is made of PDMS (polydimethylsiloxane), a lightweight, flexible silicone used in products such as contact lenses and catheters.

The other component acts like an artificial muscle that changes the curvature of the lens. It’s made from a biocompatible hydrogel dusted with a light-absorbing chemical. When light strikes the chemical, it heats up, and that warmth causes the gel to change shape.

The team combined these two parts into a soft robotic eye, with the hydrogel surrounding the central lens. When exposed to heat—such as that stemming from light—the gel releases water and contracts. As it shrinks, the lens flattens and its focal length increases, allowing the eye to resolve objects at greater distances.
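For readers curious about the optics behind that trade-off: for a thin lens with one flat side and one curved side (a plano-convex lens), the lensmaker’s equation reduces to f = R / (n - 1), where R is the radius of curvature of the curved surface and n is the lens material’s refractive index. A flatter lens has a larger R and therefore a longer focal length. The short Python sketch here illustrates that relationship. It is a simplification under stated assumptions, not the team’s model: it treats the soft lens as an ideal thin plano-convex lens, assumes a refractive index of about 1.41 (a typical figure for PDMS), and uses made-up radii rather than anything measured in the study.

```python
# Minimal sketch of the lensmaker's equation for a thin plano-convex lens:
#     1/f = (n - 1) / R   ->   f = R / (n - 1)
# ASSUMPTIONS: ideal thin lens; n = 1.41 is an assumed typical refractive
# index for PDMS; the radii below are hypothetical, not data from the study.

PDMS_INDEX = 1.41  # assumed refractive index of PDMS


def plano_convex_focal_length(radius_mm: float, n: float = PDMS_INDEX) -> float:
    """Return the focal length (mm) of a thin plano-convex lens whose
    curved surface has radius of curvature radius_mm."""
    if radius_mm <= 0:
        raise ValueError("radius of curvature must be positive")
    return radius_mm / (n - 1.0)


if __name__ == "__main__":
    # A larger radius of curvature means a flatter lens,
    # so the printed focal lengths grow as the lens flattens.
    for radius in (2.0, 4.0, 8.0):  # hypothetical radii in millimeters
        f = plano_convex_focal_length(radius)
        print(f"R = {radius:.1f} mm -> f = {f:.2f} mm")
```

Doubling the radius doubles the focal length in this idealized picture, which is the intuition behind “the lens flattens and its focal length increases.”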

Depriving the system of light, much like closing your eyes, cools the gel. It then swells back to its original plumpness, releasing the tension so the lens resets.

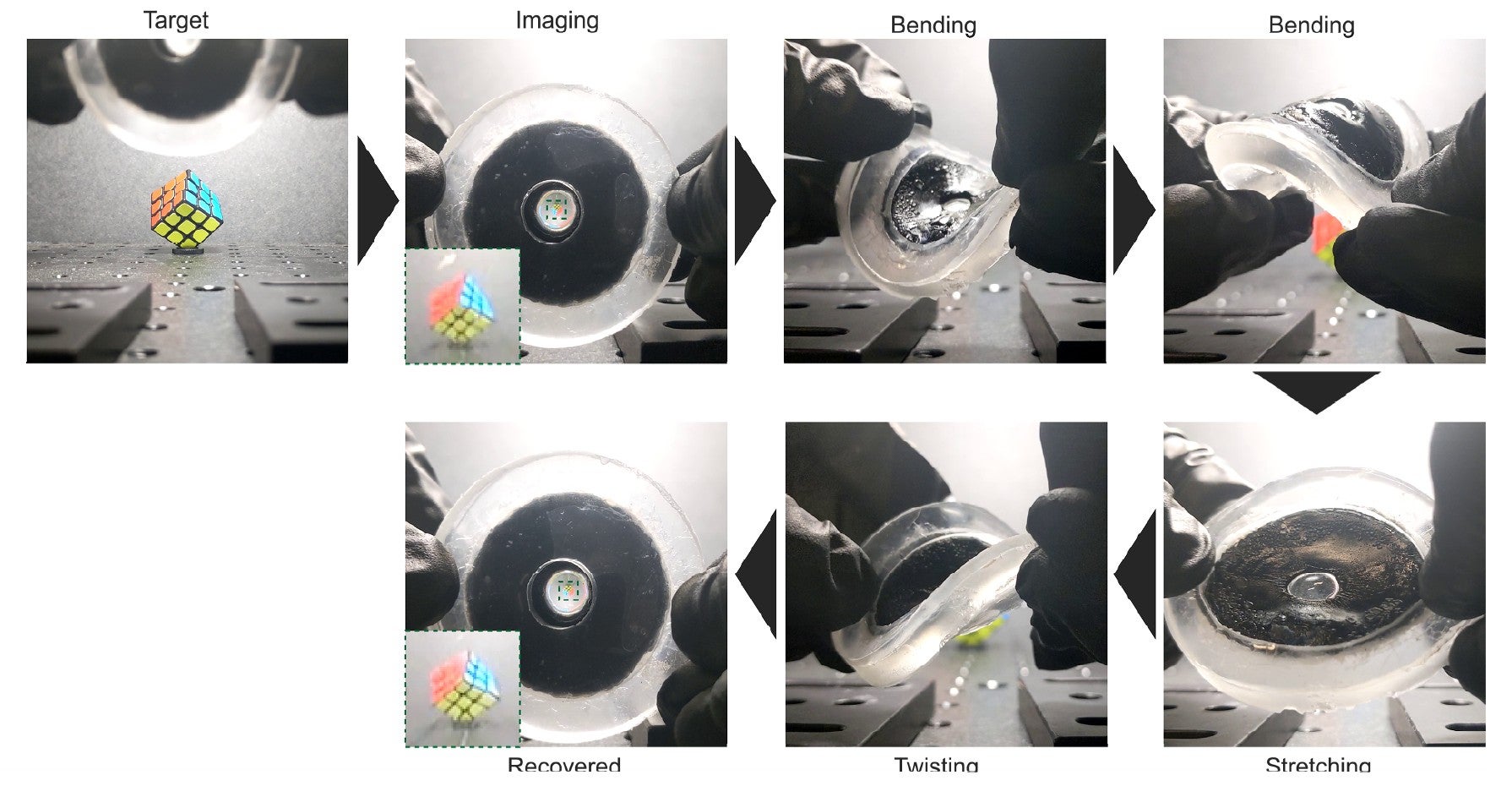

The design offers better mechanical stability than previous versions, wrote the team. Because the gel contracts under light, it can form a stronger supporting structure that keeps the delicate lens from bending or collapsing as it changes shape. The robotic eye worked as expected across the light spectrum, with resolution and focus comparable to the human eye. It was also durable, maintaining performance after repeated cycles of bending, twisting, and stretching.

With additional tinkering, the soft lens proved to be an effective replacement for traditional glass lenses in optical instruments. The team attached it to a standard microscope and imaged a range of biological samples, including fungal fibers, microscopic hairs on an ant’s leg, and the gap between a tick’s claws, all roughly a tenth the width of a human hair.

The team also wants to improve the system. Recently developed hydrogels respond to light faster and with stronger mechanical forces, which could extend the robotic eye’s focal range. Because the system depends heavily on temperature changes, its use in extreme environments could be limited. Exploring different chemical additives could shift its operating temperature range and tailor the hydrogel to particular uses.

And because the robotic eye “sees” across the light spectrum, it could in theory mimic other creatures’ eyes, such as those of mantis shrimp, which can detect color differences invisible to humans, or reptilian eyes that can capture UV light.

A next step is to incorporate it into a soft robot as a biologically inspired camera system that doesn’t rely on electronics or extra power. “This system would be a significant demonstration for the potential of our design to enable new types of soft visual sensing,” wrote the team.

The post A Squishy New Robotic ‘Eye’ Automatically Focuses Like Our Own appeared first on SingularityHub.

